Zero10 Brings Web Widget, Generative AI for Virtual Fashion Try-ons

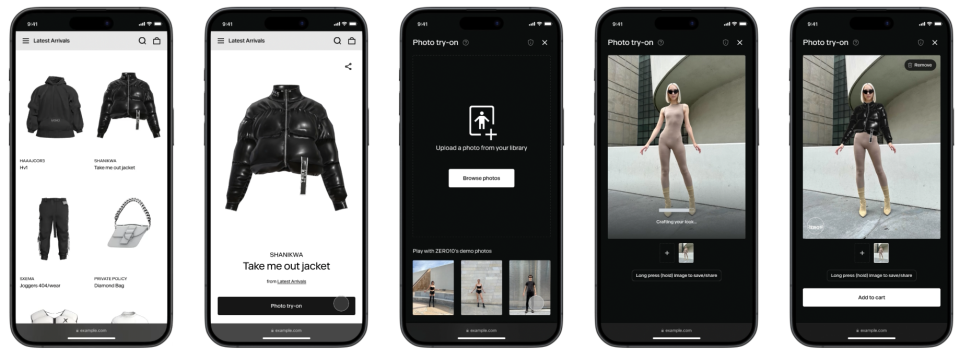

Zero10, the New York City-based augmented-reality developer known in fashion circles for its work with Maisie Wilen, Coach, Nike and many more, is expanding its virtual try-on tech. On Thursday, the company introduced a new AR web widget to bring the experience to e-commerce, while it also beta tests a new generative AI spin that may radically simplify what it takes for brands to offer the feature.

The web widget allows brand partners to add Zero10’s AR try-ons to their websites and apps, so users can upload selfies and, within five seconds, see how they’d look in a given outfit. The company envisions various contexts for the tool, such as letting gamers try on their favorite avatar looks in real life. In fashion, the obvious scenario is helping shoppers make purchase decisions, which could minimize returns. But try-ons could also help brands gauge consumer demand for a digital prototype before production, whether at scale or on demand. Zero10’s approach is built on producing 3D models of garments.


Zero10’s AR solutions cater to both physical retail and online shopping: the company noted that its models can be integrated into AR Mirrors, used in its own Zero10 app or deployed on the web for online try-ons.

Though not as mature as the web widget, the AI-based virtual try-on could end up being the more intriguing tool.

Generative AI, a form of artificial intelligence that excels at creating text, music, art and other content, exploded this year, prompting brands, fashion designers, artists and others to experiment with different approaches. The main business applications have tended to center on marketing, advertising, product design and customer service. But virtual try-on may prove to be a particularly compelling destination for the tech.

The goal of Zero10’s research and development is to use the tech to streamline the work that goes into virtual try-ons. The company’s existing technology requires fashion designers to create 3D models of virtual garments, but in the new process, AI can generate the try-on from a small set of still images: one to five clothing photos and a single image of the person.

“At the start of our research, we needed up to 10 photos of the target garment for virtual try-on. Although it was relatively straightforward to collect these images for our test dataset, obtaining 10 photos of a single clothing item at scale turned out to be extremely challenging,” Alexey Borisov, Zero10’s chief technology officer, and engineer Ilya Zakharkin co-wrote in a blog post last week that covered the research.

“We found that some e-commerce websites only have one photo of a clothing item. Therefore, we developed a pipeline that requires only a single image of the garment,” they said. The post is a fascinating read for anyone interested in warping modules (which warp the virtual garment to fit the body), refinement networks (which make the try-on image look realistic) and the impact of visual generative AI tools such as DALL-E, Imagen, Stable Diffusion and Midjourney, which solved some issues but introduced new ones.

Ultimately, the machine learning team arrived at a solution that combined elements of the earlier approaches with the new tools so that each offset the others’ weaknesses, while also addressing backgrounds, realistic lighting, high resolution and more. The result drastically changed the technical pipeline, cutting the number of required clothing images from five to 10 down to just one.

Zero10 is now beta testing the solution, so the tech isn’t widely available yet. But it also means that retailers and brands with massive catalogs of stock keeping units, which likely found virtual try-ons prohibitively complex at that volume, may soon have easier access to the shopping feature.
