AI can spot tuberculosis early by listening to your cough

The same underlying technology powering massively popular generative AI models from large tech firms like OpenAI is now being used to scan for early signs of lung disease. Google, one of the leaders in new AI models, is partnering with a healthcare startup that’s analyzing vast datasets of coughs and sneezes to detect signs of tuberculosis and other respiratory diseases before they get worse. It’s one of many ways the fast-evolving technology is reshaping early disease detection across the healthcare industry. What happens once that initial diagnosis is made, however, still requires the expertise of human clinicians.

Google’s vast database of coughs and stuffy noses

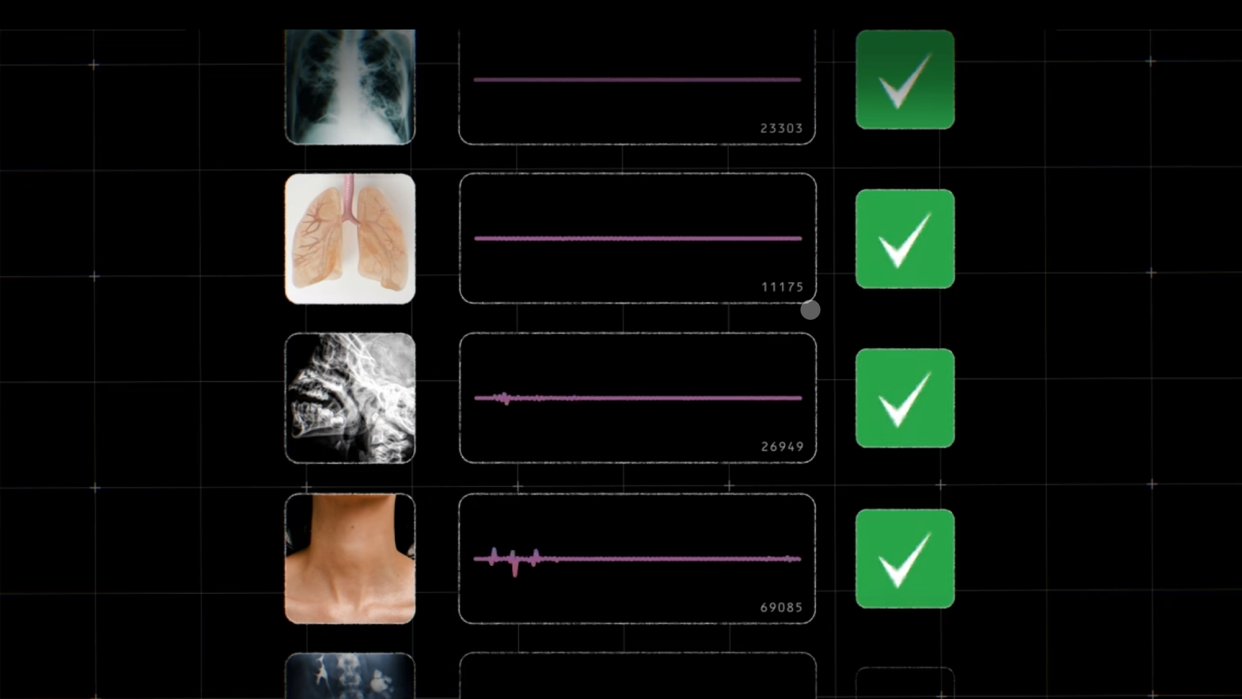

Earlier this year, Google released details about a new self-supervised deep-learning model for health applications, which it dubbed Health Acoustic Representations (HeAR). The model was trained on around 300 million two-second audio snippets of people coughing, sneezing, breathing, and sniffling. This diverse set of audio samples was reportedly drawn from non-copyrighted public data from around the world. For a sense of scale, the cough model alone was trained on 100 million different cough sounds. In theory, all of this data should reveal patterns in what a healthy respiratory system sounds like. The trained model can then use that knowledge to look for anomalies in a new audio sample from a patient that could point to a potential health risk.
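The "learn what normal sounds like, then flag departures from it" idea can be illustrated with a toy anomaly-scoring pipeline. This is a minimal sketch under stated assumptions, not Google’s implementation: the 16 kHz sample rate, the hand-crafted `toy_embed` features standing in for a learned encoder like HeAR, and the z-score distance are all illustrative choices. Only the two-second clip length comes from the description above.

```python
import math
from statistics import mean, pstdev
from typing import Callable, List, Sequence, Tuple

# Assumption: 16 kHz mono audio. The two-second clip length comes from the article.
SAMPLE_RATE_HZ = 16_000
CLIP_SECONDS = 2
CLIP_LEN = SAMPLE_RATE_HZ * CLIP_SECONDS


def split_into_clips(samples: Sequence[float], clip_len: int = CLIP_LEN) -> List[List[float]]:
    """Cut a recording into non-overlapping fixed-length clips, zero-padding the last one."""
    clips = []
    for start in range(0, len(samples), clip_len):
        clip = list(samples[start:start + clip_len])
        clip.extend([0.0] * (clip_len - len(clip)))
        clips.append(clip)
    return clips


def toy_embed(clip: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a learned encoder: [RMS energy, zero-crossing rate]."""
    rms = math.sqrt(sum(x * x for x in clip) / len(clip))
    crossings = sum(1 for a, b in zip(clip, clip[1:]) if (a < 0) != (b < 0))
    return [rms, crossings / max(len(clip) - 1, 1)]


def fit_reference(embeddings: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-dimension mean and spread of embeddings from reference ("normal") recordings."""
    dims = list(zip(*embeddings))
    means = [mean(d) for d in dims]
    stds = [pstdev(d) or 1e-9 for d in dims]  # avoid dividing by zero on constant features
    return means, stds


def anomaly_score(embedding: Sequence[float], means: Sequence[float], stds: Sequence[float]) -> float:
    """Euclidean length of the per-dimension z-scores: larger means further from the reference."""
    return math.sqrt(sum(((e - m) / s) ** 2 for e, m, s in zip(embedding, means, stds)))


def score_recording(
    samples: Sequence[float],
    means: Sequence[float],
    stds: Sequence[float],
    embed: Callable[[Sequence[float]], List[float]] = toy_embed,
    clip_len: int = CLIP_LEN,
) -> float:
    """Score every clip of a non-empty recording and report the most unusual one."""
    return max(anomaly_score(embed(c), means, stds) for c in split_into_clips(samples, clip_len))
```

To use it, you would embed clips from recordings treated as normal, call `fit_reference` on those embeddings, then pass new recordings to `score_recording`. Taking the maximum over clips means a single unusual cough anywhere in a recording raises its score. A real system would swap `toy_embed` for a trained encoder and calibrate a decision threshold on clinically labeled data.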

More recently, Google announced in a blog post that it had begun working with Salcit Technologies, an India-based respiratory healthcare startup, to apply HeAR in the real world and look for early signs of tuberculosis. Bloomberg reported on the partnership this week. Salcit has its own product, called Swaasa, which lets users record themselves coughing using their mobile device’s microphone. An AI model then compares that audio against a database of coughs to look for indicators of the deadly but treatable disease. From there, patients can decide whether to seek out a doctor for further treatment. By merging their own model with HeAR, the two companies expect to improve the product’s effectiveness and accuracy for early respiratory illness detection. A reported 1.3 million people died of tuberculosis worldwide in 2022, and India accounts for nearly 25% of those deaths annually.
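Swaasa’s internals aren’t described here, but one common way to "compare a recording against a database of coughs" is a k-nearest-neighbour vote over embedding vectors. The sketch below is hypothetical: the cosine-similarity metric, the labels, the two-dimensional vectors, and the default `k` are illustrative assumptions, not Salcit’s or Google’s method.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# Each database entry pairs a cough embedding with a hypothetical screening label.
Entry = Tuple[Sequence[float], str]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def knn_screen(query: Sequence[float], database: List[Entry], k: int = 3) -> Tuple[str, float]:
    """Majority label among the k most similar reference coughs, plus its share of the votes."""
    if not database:
        raise ValueError("database must not be empty")
    ranked = sorted(database, key=lambda entry: cosine_similarity(query, entry[0]), reverse=True)
    neighbours = ranked[:k]
    # Counter.most_common orders equal counts by first appearance,
    # so ties go to the label of the closest neighbour.
    votes = Counter(label for _, label in neighbours)
    label, count = votes.most_common(1)[0]
    return label, count / len(neighbours)


if __name__ == "__main__":
    # Made-up embeddings purely for illustration.
    db: List[Entry] = [
        ([0.9, 0.1], "likely_healthy"),
        ([0.8, 0.2], "likely_healthy"),
        ([0.1, 0.9], "refer_for_testing"),
        ([0.2, 0.8], "refer_for_testing"),
        ([0.15, 0.95], "refer_for_testing"),
    ]
    print(knn_screen([0.1, 1.0], db))
```

The vote share gives a rough confidence signal; a screening app would more likely surface "see a doctor" than a diagnosis, in line with the article’s point that follow-up still belongs to clinicians.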

AI’s predictive capabilities are helping healthcare professionals detect various diseases faster. Research has already shown these models can be effective in screening for potentially cancerous tumors that might otherwise go undetected. Similar models are also being used to look for early signs of breast cancer, myopia, and heart disease. Radiologists are already using generative AI tools to speed up medical imaging analysis. AI’s impact on diagnosis may even extend beyond chronic conditions normally spotted later in life. Just last year, researchers from the University of Louisville created an AI system they say can analyze MRI scans of toddlers to predict, with 98.5% accuracy, whether they would be clinically diagnosed with autism.