Microsoft president compares artificial intelligence to Terminator, says "we better have a way to slow down or turn off AI"

What you need to know

Microsoft President Brad Smith recently discussed AI in an interview, covering topics such as regulation, whether artificial intelligence will replace jobs, and what controls need to be put in place.

Smith emphasized that Copilot is a tool and likened it to the printing press and other technological developments that people have adapted to use.

The Microsoft president also discussed the "existential threat to humanity" that many bring up when discussing AI and what needs to be done to prevent possible issues.

Artificial intelligence is one of the hottest topics of 2024, and Microsoft is all in on the technology. The tech giant has invested billions of dollars into AI and is working to incorporate some form of artificial intelligence into all of its software. Microsoft President Brad Smith recently spoke about AI in an extensive interview with EL PAÍS.

The entire interview is worthwhile, as it provides unique insight into the potential for AI to advance society and what's being done to mitigate potential threats. While AI has many worthy uses, it's also used for cyberattacks. Smith touched on everything from whether AI will replace employees to how to stop the plot of Terminator from happening in real life.

Regulating AI

Smith has said several times that Microsoft should help drive regulation of AI. He reiterated that sentiment and drove the point home with an analogy.

"When we buy a carton of milk in the grocery store, we buy it not worrying about whether it’s safe to drink, because we know that there is a safety floor for the regulation of it," said Smith.

"If this is, as I think it is, the most advanced technology on the planet, I don’t think it’s unreasonable to ask that it have at least as much safety regulation in place as we have for a carton of milk."

Specifically, Smith touched on the European AI Act and similar legislation in the United States and United Kingdom.

While Smith values regulation, he warned of "onerous administration that would drive up costs." Microsoft is the most valuable company in the world, so it won't struggle with that administrative burden, a point Smith made himself, but startups could be hurt by poorly made regulation.

Putting an emergency brake on AI

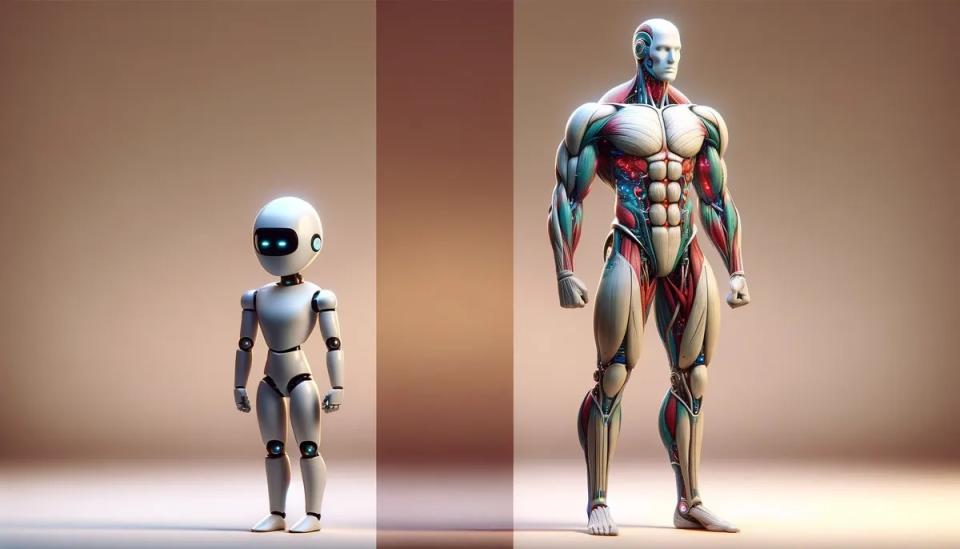

One concern about AI is that it will either reach a point of sentience or become intelligent enough to harm humanity. While that may sound like the stuff of science fiction, there are genuine concerns about how much power and control AI could develop. Smith covered this toward the end of the interview.

Smith explained that we can address both today's and tomorrow's problems now, with no need to wait. He added that buses and trains have emergency brakes, so a similar concept makes sense for AI.

"This is intended to address what people often describe as an existential threat to humanity, that you could have runaway AI that would seek to control or extinguish humanity. It’s like every Terminator movie and about 50 other science fiction movies. One of the things that is striking to me after 30 years in this industry is often life does imitate art," said Smith.

"It’s amazing that you can have 50 movies with the same plot: a machine that can think for itself decides to enslave or extinguish humanity, and humanity fights back and wins by turning the machine off. What does that tell you? That we better have a way to slow down or turn off AI, especially if it’s controlling an automated system like critical infrastructure."

Who guards the guardians?

I don't know many people who argue against having at least some level of control and regulation over AI. But the age-old question of "who guards the guardians" remains. Microsoft has a vested interest in AI, so how much of a role should it play in deciding what's regulated or limited? Politicians have a variety of motives, not all good, so it's not as if full control over AI should be handed over to governments.

As is the case with any advanced technology, organizations, experts, and governing bodies need to come together. There will be tension, with pushing and pulling to expand or retract the influence of AI, but that's better than letting either side go unfettered.