Nvidia's Project GR00T brings the human-robot future a significant step closer

The age of humanoid robots could be a significant step closer thanks to a new release from Nvidia.

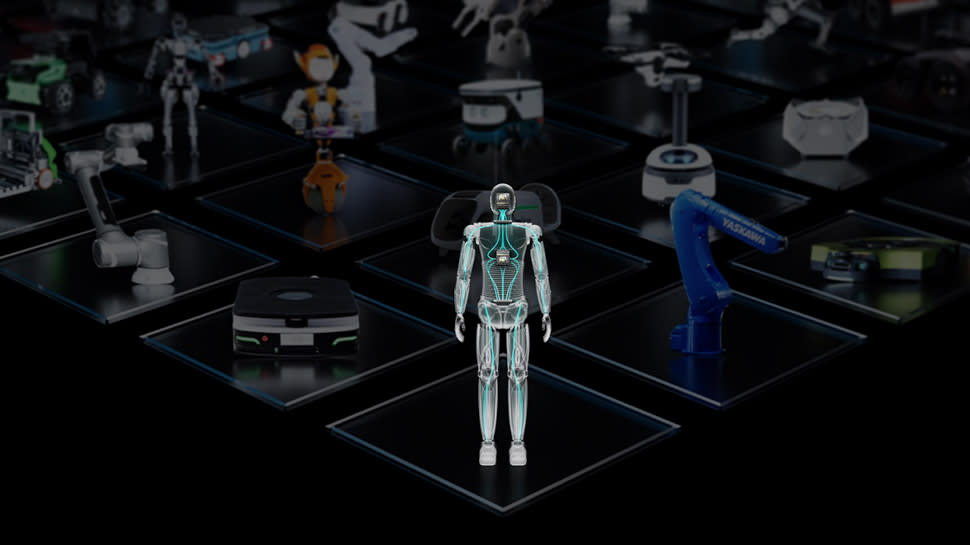

The computing giant has announced the launch of Project GR00T, a new foundation model designed to support the development of such robots for industrial use cases.

Nvidia revealed the model at GTC 2024. The company says it will make robots smarter and more functional than ever before, and that they will learn by watching humans.

We are GR00T?

Jensen Huang, Nvidia's founder and CEO, announced Project GR00T (short for "Generalist Robot 00 Technology") on stage at GTC 2024. He revealed that robots powered by the platform will be designed to understand natural language and to emulate movements by observing human actions.

This will allow them to quickly learn coordination, dexterity and other skills so they can navigate, adapt to and interact with the real world. It will definitely not lead to a robot uprising at all.

Huang went on to show off several demos in which Project GR00T-powered robots carried out a range of tasks, from XXX, illustrating what the platform could make possible.

“Building foundation models for general humanoid robots is one of the most exciting problems to solve in AI today,” Huang said. “The enabling technologies are coming together for leading roboticists around the world to take giant leaps towards artificial general robotics.”

Nvidia has also built a new computer specifically for humanoid robots, which underlines how important Project GR00T is to the company. The computer, Jetson Thor, is built around a system-on-a-chip (SoC).

The SoC includes a GPU based on Nvidia's latest Blackwell architecture. The GPU's transformer engine can deliver 800 teraflops of AI performance, allowing robots to run multimodal generative AI models such as GR00T.

The company also revealed upgrades to its Nvidia Isaac robotics platform. These are designed to make robotic arms smarter, more flexible and more efficient than ever, and so a much more appealing choice for factories and other industrial settings around the world.

The upgrades include new collections of pretrained robotics models, libraries and reference hardware, aimed at enabling faster learning and greater efficiency.